Getting Started with the Stats HPC¶

This introductory guide explains how to use the system-wide PyTorch and TensorFlow Conda environments, using the example of running Slurm jobs on the srf_gpu_01 cluster.

Overview¶

The Department of Statistics HPC clusters are:

srf_cpu_01 (shared CPU cluster)

srf_gpu_01 (shared GPU cluster)

swan (For research groups)

At present, two preconfigured Conda environments are available on the srf_gpu_01 cluster:

/opt/conda/envs/pytorch-2025a (PyTorch, GPU-enabled)

/opt/conda/envs/tensorflow-2025a (TensorFlow, GPU-enabled)

Connecting to the Stats HPC¶

To copy your files to the Stats HPC and to connect to it, please see the Intro to HPC & Linux presentation slides, especially p.8-11.

Running your test PyTorch Slurm job¶

Please copy the file test_pytorch_gpu.sbatch and/or test_tensorflow_gpu.sbatch (see below) to the slurm-hn02 login node as shown in the Intro to HPC & Linux presentation slides. These sbatch files are Slurm job files.

1#!/bin/bash

2#SBATCH --job-name=test_pytorch_gpu

3#SBATCH --mail-user=<YOUR-EMAIL-ADDRESS>

4#SBATCH --mail-type=BEGIN,END,FAIL

5#SBATCH --partition=standard-gpu

6#SBATCH --clusters=srf_gpu_01

7#SBATCH --gres=gpu:1

8#SBATCH --cpus-per-task=2

9#SBATCH --mem=4G

10#SBATCH --time=00:10:00

11#SBATCH --output=test_pytorch_gpu_%j.out

12

13# Load the Conda module

14module load conda

15

16# Source conda.sh for non-interactive shell

17source /opt/conda/etc/profile.d/conda.sh

18

19# Activate PyTorch environment

20conda activate /opt/conda/envs/pytorch-2025a

21

22# Display GPU information

23echo "Running on host: $(hostname)"

24echo "CUDA_VISIBLE_DEVICES: $CUDA_VISIBLE_DEVICES"

25nvidia-smi

26

27# Run a PyTorch CUDA test

28python3 - <<'EOF'

29import torch

30print("PyTorch version:", torch.__version__)

31print("CUDA available:", torch.cuda.is_available())

32if torch.cuda.is_available():

33    print("Using GPU:", torch.cuda.get_device_name(0))

34    x = torch.rand(1000, 1000, device="cuda")

35    y = torch.rand(1000, 1000, device="cuda")

36    print("Tensor sum (GPU):", (x + y).sum().item())

37else:

38    print("Running on CPU.")

39EOF

40

41sleep 300

1#!/bin/bash

2#SBATCH --job-name=test_tensorflow_gpu

3#SBATCH --mail-user=<YOUR-EMAIL-ADDRESS>

4#SBATCH --mail-type=BEGIN,END,FAIL

5#SBATCH --partition=standard-gpu

6#SBATCH --clusters=srf_gpu_01

7#SBATCH --gres=gpu:1

8#SBATCH --cpus-per-task=2

9#SBATCH --mem=4G

10#SBATCH --time=00:10:00

11#SBATCH --output=test_tensorflow_gpu_%j.out

12

13# Load the Conda module

14module load conda

15

16# Source conda.sh for non-interactive shell

17source /opt/conda/etc/profile.d/conda.sh

18

19# Activate TensorFlow environment

20conda activate /opt/conda/envs/tensorflow-2025a

21

22# Display GPU information

23echo "Running on host: $(hostname)"

24echo "CUDA_VISIBLE_DEVICES: $CUDA_VISIBLE_DEVICES"

25nvidia-smi

26

27# Run a TensorFlow CUDA test

28python3 - <<'EOF'

29import tensorflow as tf

30print("TensorFlow version:", tf.__version__)

31print("GPU available:", tf.config.list_physical_devices('GPU'))

32if tf.config.list_physical_devices('GPU'):

33    print("Using GPU:", tf.config.list_physical_devices('GPU')[0])

34    with tf.device('/GPU:0'):

35        a = tf.random.normal((1000, 1000))

36        b = tf.random.normal((1000, 1000))

37        c = tf.add(a, b)

38    print("Tensor sum (GPU):", tf.reduce_sum(c).numpy())

39else:

40    print("Running on CPU.")

41EOF

42

43sleep 300

You will need to use a command-line interface (CLI) text editor to open the files. If you have never used a Linux CLI text editor, nano is a great option for beginners. Here is a YouTube video that does a great job of introducing you to nano: https://www.youtube.com/watch?v=g2PU--TctAM

The sbatch files above contain a number of #SBATCH lines. These are Slurm job parameters that define how Slurm runs your job on the cluster, and you can edit them to suit your requirements. For now, I recommend you change only the --mail-user parameter to your email address and then submit the Slurm job.

Explanation of #SBATCH Options¶

--job-name: Job name shown in queues and logs.

--mail-user: Email address at which you want to receive Slurm notifications.

--mail-type: Events that trigger a Slurm email (BEGIN, END, FAIL).

--clusters: The cluster on which you want to run your Slurm job (e.g. srf_gpu_01).

--partition: Partition/queue (e.g. standard-gpu).

--gres=gpu:1: The number of GPUs you are requesting (here, 1 GPU).

--cpus-per-task: The number of CPU cores you want assigned per task.

--mem: The amount of memory you are requesting (e.g. 4G).

--time: Maximum runtime/time limit (HH:MM:SS).

--output: Where you want Slurm to write the Slurm job log file (%j = job ID).
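To double-check which options a job file actually sets before you submit it, something like the following Python sketch could help. It is only an illustration: parse_sbatch_options is a hypothetical helper name, it only handles the long --name=value form used in the job files above, and it assumes (as sbatch does) that #SBATCH lines after the first command are ignored.

```python
import shlex


def parse_sbatch_options(script_text: str) -> dict:
    """Collect the long-form #SBATCH options of a job script into a dict."""
    options = {}
    for line in script_text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            break  # first real command: later #SBATCH lines are not read
        if not line.startswith("#SBATCH"):
            continue  # blank line, shebang, or ordinary comment
        for token in shlex.split(line[len("#SBATCH"):]):
            if not token.startswith("--"):
                continue  # short options such as -M are skipped in this sketch
            key, _, value = token[2:].partition("=")
            options[key] = value
    return options


# A shortened copy of the PyTorch job file header from this guide.
example = """#!/bin/bash
#SBATCH --job-name=test_pytorch_gpu
#SBATCH --partition=standard-gpu
#SBATCH --clusters=srf_gpu_01
#SBATCH --gres=gpu:1
#SBATCH --mem=4G
#SBATCH --time=00:10:00

module load conda
"""

opts = parse_sbatch_options(example)
for name, value in opts.items():
    print(f"--{name} = {value}")
```

Running it on your own job file (read with open(...).read()) gives a quick overview of the resources you are about to request.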

The PyTorch test Slurm job¶

l.14 loads the cluster's system-wide Conda module, and l.17 sources its shell configuration. After that, l.20 activates the system-wide PyTorch environment. If you are new to using Conda and PyTorch in this way, it's recommended you do not change l.14-20.
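If you want to confirm from inside your Python code that the activation worked, one option is to print the interpreter path and the CONDA_PREFIX environment variable, which conda activate sets. This is a minimal sketch; describe_python_environment is a hypothetical helper name, and on the cluster you would expect the prefix to be the environment path activated in l.20.

```python
import os
import sys


def describe_python_environment() -> dict:
    """Report which interpreter and Conda environment this process is using."""
    return {
        "python_executable": sys.executable,
        # CONDA_PREFIX is None when no Conda environment has been activated.
        "conda_prefix": os.environ.get("CONDA_PREFIX"),
    }


info = describe_python_environment()
for name, value in info.items():
    print(f"{name}: {value}")
```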

Ideally, the system Conda + PyTorch is sufficient for your needs. If it’s missing anything or not working as expected, please let me know at ithelp@stats.ox.ac.uk.

l.23-24 are self-explanatory, I think: echo is the print command for Linux. The nvidia-smi command in l.25 is a common way to query information about the GPUs on the cluster. You will find the output of all these commands in your Slurm job's log file (see below).

l.28-39 is just a basic PyTorch script to check whether PyTorch detects the GPU. Later on, you would either paste your own PyTorch script in its place, or replace l.28-39 with a call to your Python file, e.g.:

python3 my-pytorch-script.py
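As a rough sketch of what such a standalone file might contain, here is the same GPU check as in the job file, written as a script. The file name is the placeholder from the command above, pick_device and main are hypothetical helper names, and the guarded import is only there so the script prints a hint instead of crashing if the PyTorch environment was not activated.

```python
"""Hypothetical my-pytorch-script.py: GPU check with a CPU fallback."""

try:
    import torch
except ImportError:  # e.g. the pytorch-2025a environment was not activated
    torch = None


def pick_device() -> str:
    """Return "cuda" if PyTorch can see a GPU, otherwise "cpu"."""
    if torch is not None and torch.cuda.is_available():
        return "cuda"
    return "cpu"


def main() -> None:
    device = pick_device()
    print("Selected device:", device)
    if torch is None:
        print("PyTorch is not importable; was the Conda environment activated?")
        return
    # Same arithmetic as the test job, but on whichever device was selected.
    x = torch.rand(1000, 1000, device=device)
    y = torch.rand(1000, 1000, device=device)
    print("Tensor sum:", (x + y).sum().item())


if __name__ == "__main__":
    main()
```

Selecting the device once and passing it along means the same script also runs on a node without a GPU, which can be handy for quick tests.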

You do not need the sleep command in l.41 going forward. I included it only to give you time to view your job running in the queue. The PyTorch script used here is too basic to require much computing power, so the cluster would complete the job before you had time to run the squeue command (see below). That is why I've included sleep 300, which means "do nothing, and just wait for 300 seconds."

Submitting the Slurm job¶

On the login node (e.g. slurm-hn02), type the Slurm batch job submission command into the terminal:

For the PyTorch sbatch file:

sbatch test_pytorch_gpu.sbatch

For the TensorFlow sbatch file:

sbatch test_tensorflow_gpu.sbatch

When you press ENTER, if everything went well, the terminal will return a message like:

Submitted batch job 9616 on cluster srf_gpu_01

Each job is assigned a unique job ID by Slurm. In the above example, the job ID is 9616.
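If you later submit jobs from a script and want to keep track of their IDs, the submission message can be parsed. The sketch below assumes the message has the form shown above; parse_submission and SUBMIT_PATTERN are hypothetical names, and the "on cluster ..." part is treated as optional.

```python
import re

# Matches e.g. "Submitted batch job 9616 on cluster srf_gpu_01".
SUBMIT_PATTERN = re.compile(r"Submitted batch job (\d+)(?: on cluster (\S+))?")


def parse_submission(message: str):
    """Return (job_id, cluster) from an sbatch submission message."""
    match = SUBMIT_PATTERN.search(message)
    if match is None:
        raise ValueError(f"not an sbatch submission message: {message!r}")
    job_id = int(match.group(1))
    cluster = match.group(2)  # None if the message names no cluster
    return job_id, cluster


job_id, cluster = parse_submission("Submitted batch job 9616 on cluster srf_gpu_01")
print("Job ID:", job_id)
print("Cluster:", cluster)
```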

Viewing the Slurm job queue¶

Now that your job is running, you can view its status in the job queue:

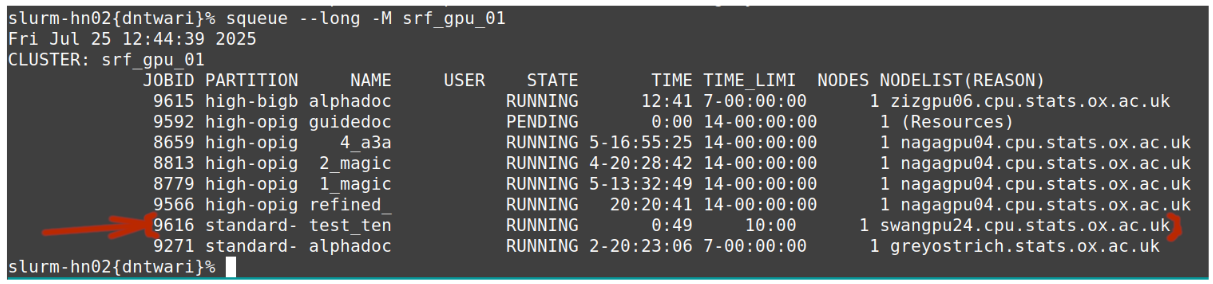

squeue --long -M srf_gpu_01


In the screenshot you can see Slurm job 9616 running on the srf_gpu_01 cluster. If you see something similar for your job, congratulations, you’ve just submitted your first Slurm job to the Department of Statistics HPC!
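The same queue check can be wrapped in Python, for example to show only your own jobs. This is a sketch under assumptions: build_squeue_command and show_queue are hypothetical helper names, and --user is squeue's option for filtering by username. If squeue is not installed (for example on your laptop), the sketch only prints the command it would run.

```python
import shutil
import subprocess
from typing import List, Optional


def build_squeue_command(cluster: str, user: Optional[str] = None) -> List[str]:
    """Build the squeue call used in this guide, optionally for one user."""
    command = ["squeue", "--long", "-M", cluster]
    if user is not None:
        command += ["--user", user]
    return command


def show_queue(cluster: str, user: Optional[str] = None) -> None:
    """Run squeue if it is available, otherwise print the command."""
    command = build_squeue_command(cluster, user)
    if shutil.which("squeue") is None:
        print("squeue not found; would run:", " ".join(command))
        return
    subprocess.run(command, check=False)


show_queue("srf_gpu_01")
```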

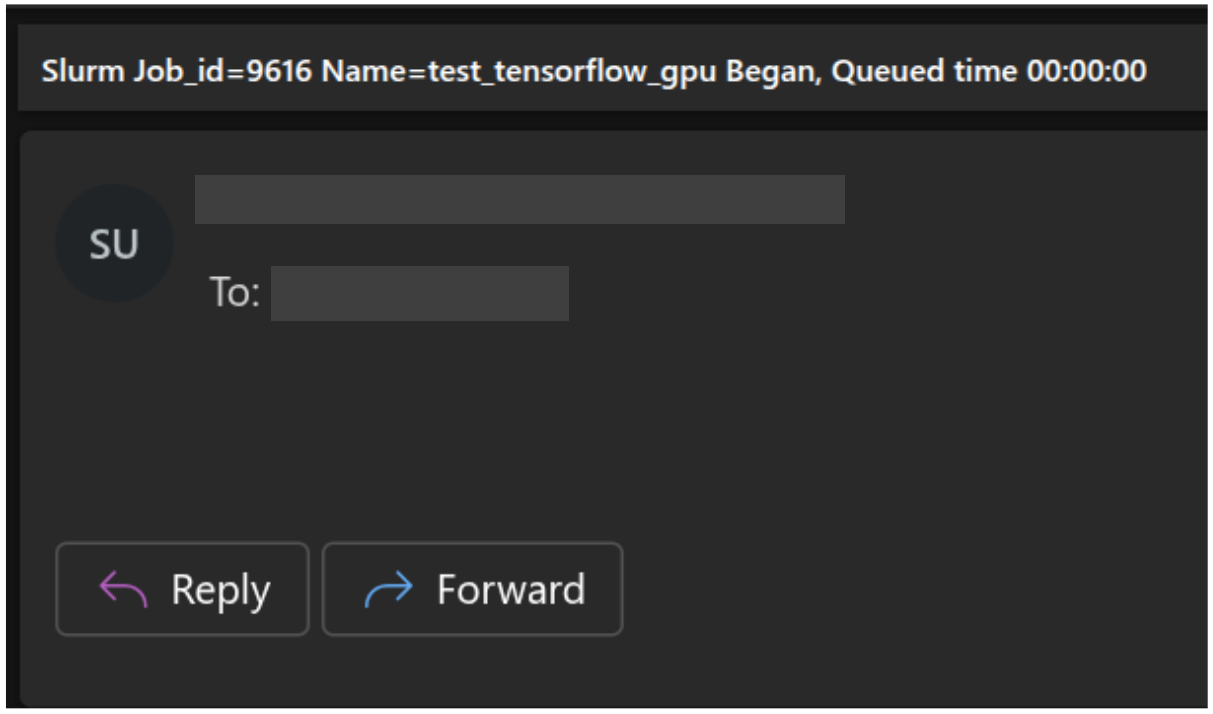

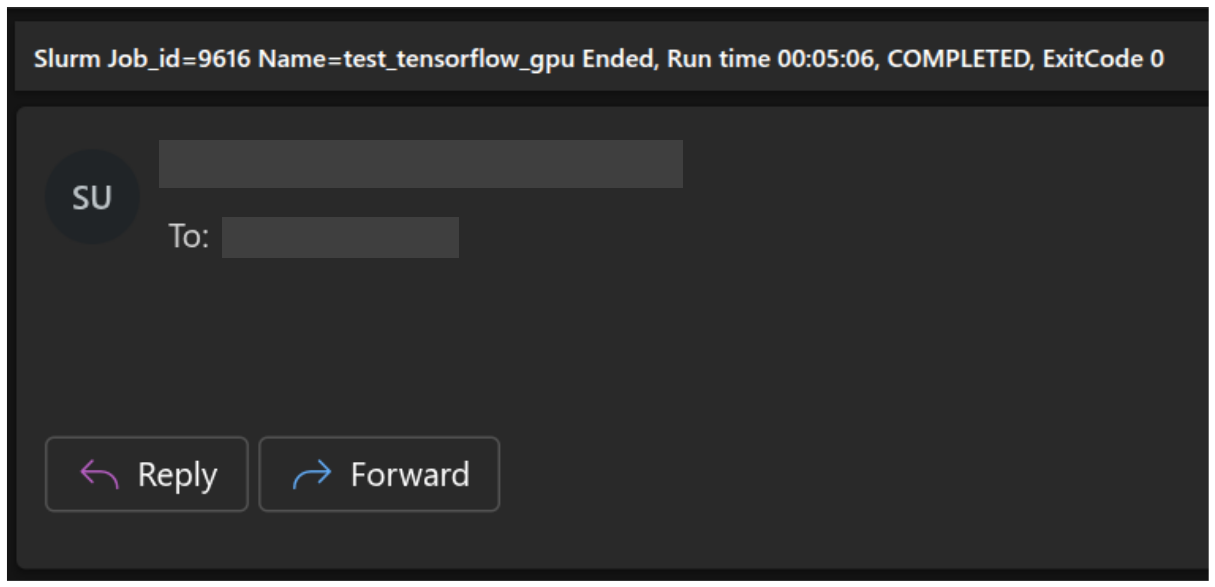

If you set your email address in the Slurm job file, you will receive two emails from the HPC: one when Slurm starts running the job on the HPC, and one when the job completes.

Job begins:

Job completed:

Reviewing your job results¶

Once this test Slurm job has completed, you can open the Slurm job log file you defined in the job file, e.g. with nano. In this example, it is located in the folder myslurmlogs/ (a relative --output path like the one in the job file is written to the directory from which you submitted the job) and is called test_pytorch_gpu_9616.out, where 9616 is the Slurm job ID I received when I ran the job at the time of writing this tutorial. Your Slurm job IDs will be different and unique.

$ nano ~/myslurmlogs/test_pytorch_gpu_......out
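To work out the log file name for a given job, you can substitute the job ID into the --output pattern yourself. The sketch below handles only the %j placeholder used in this guide (Slurm supports further placeholders that are not covered here); expand_output_pattern and log_path are hypothetical helper names.

```python
from pathlib import Path


def expand_output_pattern(pattern: str, job_id: int) -> str:
    """Replace the %j placeholder of an --output pattern with the job ID."""
    return pattern.replace("%j", str(job_id))


def log_path(submit_dir: str, pattern: str, job_id: int) -> Path:
    """Path of the log file, relative to the directory the job was submitted from."""
    return Path(submit_dir) / expand_output_pattern(pattern, job_id)


# Job ID 9616 and the myslurmlogs folder are the values from this tutorial.
print(log_path("myslurmlogs", "test_pytorch_gpu_%j.out", 9616))
```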

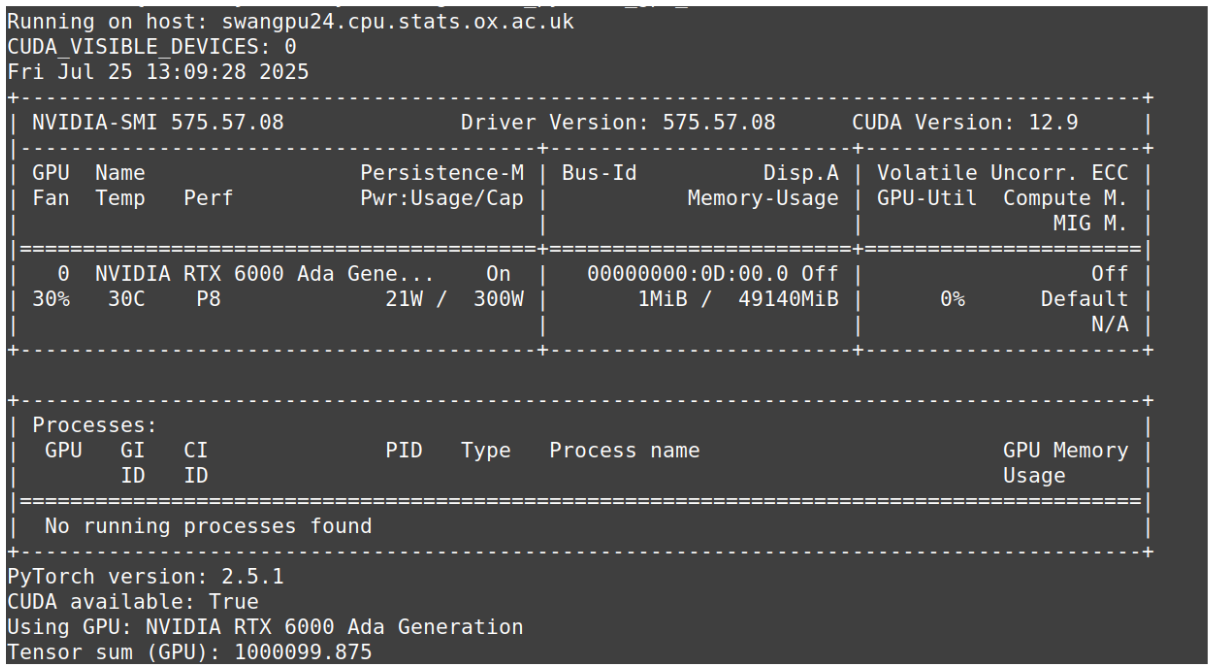

Output of test_pytorch_gpu_9616.out:

The first two lines in the above screenshot are the outputs of:

l.23 echo "Running on host: $(hostname)"

l.24 echo "CUDA_VISIBLE_DEVICES: $CUDA_VISIBLE_DEVICES"

Then, from “Fri Jul 25…” to “No running processes found..”, you have the output of the nvidia-smi command, which shows the GPU information for the cluster node this Slurm job ran on, in this case swangpu24.cpu.stats.ox.ac.uk. The remaining lines are from PyTorch.
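If you run many test jobs, you may prefer to check the log automatically rather than open each file. The sketch below looks for the "CUDA available:" line that the PyTorch test script prints; cuda_reported is a hypothetical helper name, and sample_log is a made-up two-line stand-in, not the real log from this tutorial.

```python
def cuda_reported(log_text: str):
    """Return True/False from the "CUDA available:" line, or None if absent."""
    for line in log_text.splitlines():
        if line.startswith("CUDA available:"):
            return line.split(":", 1)[1].strip() == "True"
    return None


# Made-up stand-in for a job log; a real log also contains the nvidia-smi output.
sample_log = "\n".join([
    "Running on host: <hostname>",
    "CUDA available: True",
])

print("GPU detected by PyTorch:", cuda_reported(sample_log))
```

For a real job you would pass the contents of your own log file, e.g. the text read from the .out file named after your job ID.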

If you need similar guides or software installations on the HPC, please let me know at ithelp@stats.ox.ac.uk

Useful links on Getting Started with Linux, HPC, and Parallel Programming for AI/ML and HPC¶

Linux¶

Introduction to Linux (LFS101) https://training.linuxfoundation.org/training/introduction-to-linux/

HPC¶

Introduction to Parallel Computing Tutorial https://hpc.llnl.gov/documentation/tutorials/introduction-parallel-computing-tutorial

Python (with PyTorch)¶

Getting Started with Distributed Data Parallel https://docs.pytorch.org/tutorials/intermediate/ddp_tutorial.html

Introduction to High-Performance Computing in Python https://www.hpc-carpentry.org/hpc-python/

R (programming language)¶

R doParallel: A Brain-Friendly Introduction to Parallelism in R https://www.appsilon.com/post/r-doparallel

CRAN Task View: High-Performance and Parallel Computing with R https://cran.r-project.org/web/views/HighPerformanceComputing.html